A raster file seemed more adequate and I wanted something like this, but with a much higher resolution (and ideally already georeferenced)

I found the perfect data-source from Global Forest Watch: https://www.globalforestwatch.org/

Global Forest Watch provide an incredible dataset called "Tree Cover (2000)" that has a 30x30m resolution which includes the density of tree canopy coverage overall.

It's too good to be true, right?

Well, in a sense yes. The main problem is that it's just too much data and you can't download the image as a whole.

Alternatively, they provide you an interactive map where you can download each section separately, at: http://earthenginepartners.appspot.com/science-2013-global-forest/download_v1.6.html

|

| https://storage.googleapis.com/earthenginepartners-hansen/GFC-2018-v1.6/Hansen_GFC-2018-v1.6_treecover2000_50N_010W.tif |

So, three challenges:

- How to download all images

- How to merge them together to a single file

- (Optional, but recommended) Reducing the resolution a bit to make it more manageable

1. How to Download all images

Well, doing it manually is definitively an option, although it's probably easier to do it programmatically.

import ssl

import urllib.request

ctx = ssl.create_default_context()

ctx.check_hostname = False

ctx.verify_mode = ssl.CERT_NONE

sections = []

for i in range(8,-6,-1):

for j in range(-18,18):

sections.append(f'{abs(i)*10}{"N" if i >= 0 else "S"}_{str(abs(j)*10).zfill(3)}{"E" if j >= 0 else "W"}')

for section in sections:

url = 'https://storage.googleapis.com/earthenginepartners-hansen/GFC-2018-v1.6/' + \

f'Hansen_GFC-2018-v1.6_treecover2000_{section}.tif'

with urllib.request.urlopen(url, context=ctx) as u, open(f"{section}.tif", 'wb') as f:

f.write(u.read())

The code above, in Python 3.x, iterates all the grid squares, prepares the proper download url and downloads the image.As the https certificate isn't valid you need to turn off the ssl checks, hence the code at the beginning.

2. How to merge them together to a single file

It's actually quite simple, but you'll need GDAL for that, hence you'll need to install it first.

gdal_merge is incredibly simple to use:

gdal_merge.py -o output-file-name.tif file1.tif file2.tif fileN.tif

Adding to those parameters I would suggest compressing the output, as otherwise an already large file could become horrendously huge.

gdal_merge.py -o output-file-name.tif <files> -co COMPRESS=DEFLATE

And that's it. I'll show how this all ties together on the Python script in the end, but you can "easily" do it manually if you concatenate the 504 file names on this command.

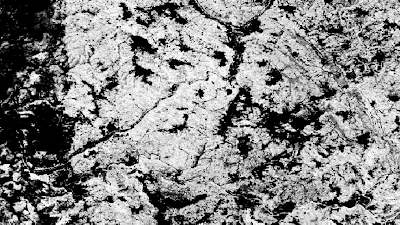

3. Reducing the resolution a bit to make it more manageable

As I've mentioned, the source images combined result in lots and lots of GBs, which I currently don't have available on my machine. Hence, I've reduced the resolution of each image.

Please note that this isn't simply a resolution change on a Graphics Software, as it needs to preserve the geospatial information. Again, GDAL to the rescue, now using the gdalwarp command:

gdalwarp -tr 0.0025 -0.0025 file.tif

The first two parameters represent the pixel size. From running the command gdalinfo on any of the original tifs I can see that the original pixel size is:

Pixel Size = (0.0002500000000000,-0.0002500000000000)

Empirically I've decided to keep 1/10th of the original precision, hence I've supplied the aforementioned values (0.0025 -0.0025)

As before, I would suggest compressing the content

gdalwarp -tr 0.0025 -0.0025 file.tif -co COMPRESS=DEFLATE

You do lose some quality, but it's a trade-off. If you have plenty of RAM + Disk Space you can keep an higher resolution.

|

| Original |

|

| 1/10th of resolution |

The following Python 3 does everything in one go. The interesting bit is that I change the resolution of each individual tile before merging the complete map. The script also cleans up after itself, only leaving the final tif file, named "treecover2000.tif"

import ssl import urllib.request import os ctx = ssl.create_default_context() ctx.check_hostname = False ctx.verify_mode = ssl.CERT_NONE extension = ".small.tif" sections = [] for i in range(8,-6,-1): for j in range(-18,18): sections.append(f'{abs(i)*10}{"N" if i >= 0 else "S"}_{str(abs(j)*10).zfill(3)}{"E" if j >= 0 else "W"}') for section in sections: print(f'Downloading section {section}') url = 'https://storage.googleapis.com/earthenginepartners-hansen/GFC-2018-v1.6/' + \ f'Hansen_GFC-2018-v1.6_treecover2000_{section}.tif' with urllib.request.urlopen(url, context=ctx) as u, open(f"{section}.tif", 'wb') as f: f.write(u.read()) os.system(f'gdalwarp -tr 0.0025 -0.0025 -overwrite {section}.tif {section}{extension} -co COMPRESS=DEFLATE') os.system(f'rm {section}.tif') os.system(f'gdal_merge.py -o treecover2000.tif { (extension + " ").join(sections)}{extension} -co COMPRESS=DEFLATE') os.system(f'rm *{extension}')The "treecover2000.tif" ends-up with 751MB and looks AWESOME. Zooming in on Portugal, Spain and a bit of France